Background

In Fall 2022, I took CS528-NLP, and as part of this course we were supposed to work on course projects. I will be using this post to document what I learned about Question Answering Systems.

As part of this project, we try to explore the different benchmarks on the SQuAD 2.0 dataset.
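SQuAD 2.0 results are usually reported as Exact Match (EM) and F1. Unanswerable questions count as correct only if the model also predicts no answer. The sketch below is modeled on the scoring logic of the official SQuAD 2.0 evaluation script as I understand it: lowercase, strip punctuation and the articles a/an/the, then compute token-overlap F1. The function names are my own, and an unanswerable question is represented here by an empty gold string.

```python
import collections
import re
import string

_PUNCT = set(string.punctuation)


def normalize_answer(s: str) -> str:
    """Lowercase, drop punctuation and the articles a/an/the, collapse whitespace."""
    s = s.lower()
    s = "".join(ch for ch in s if ch not in _PUNCT)
    s = re.sub(r"\b(a|an|the)\b", " ", s)
    return " ".join(s.split())


def exact_match(gold: str, pred: str) -> int:
    """1 if the normalized strings are identical, else 0."""
    return int(normalize_answer(gold) == normalize_answer(pred))


def f1_score(gold: str, pred: str) -> float:
    """Token-overlap F1 between a gold answer and a predicted answer."""
    gold_toks = normalize_answer(gold).split()
    pred_toks = normalize_answer(pred).split()
    # Unanswerable question (empty gold) or abstaining model (empty prediction):
    # score 1 only if both are empty.
    if not gold_toks or not pred_toks:
        return float(gold_toks == pred_toks)
    common = collections.Counter(gold_toks) & collections.Counter(pred_toks)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_toks)
    recall = num_same / len(gold_toks)
    return 2 * precision * recall / (precision + recall)


def max_over_golds(metric, golds: list[str], pred: str) -> float:
    """SQuAD lists several reference answers per question; keep the best match."""
    return max(metric(g, pred) for g in golds)
```

For example, `f1_score("Eiffel Tower", "the tower in Paris")` gives about 0.4, because only "tower" overlaps (precision 1/3, recall 1/2). The exact-match version of the same pair scores 0.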

Notes from Chapter 23 - Question Answering

In the following section, let's look into the details about Question Answering systems given in the Speech and Language Processing book by Dan Jurafsky and James H. Martin.

Blogs I would like to explore -

She uses sentence embeddings to measure the similarity between the context and the question. She also explores a strategy in which the parse tree of a sentence is used to extract the answer. Unsupervised strategies are also mentioned.

This one again uses attention vectors with an LSTM and explores the BiDAF model.

Explains the 3 different types of QA systems. She talks about 3 strategies, among them: compressing all the information into the model's weight parameters (the T5 model is used here), and, for very long contexts (databases of documents), first finding the top-k relevant documents.

I'm yet to read this one; it probably uses a CNN.
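Two of the blog approaches above reduce to the same step: rank pieces of text by their similarity to the question. One picks the context sentence closest to the question, and the other retrieves the top-k documents from a large collection. Below is a minimal sketch of that ranking step. The bag-of-words count vector in `embed` is a hypothetical stand-in for a learned sentence embedding; a real system would plug in a trained encoder and keep the rest unchanged.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a sentence encoder: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(question: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages most similar to the question, best first."""
    q = embed(question)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]
```

With `k=1` and the passages set to the sentences of one context paragraph, this picks the sentence most likely to hold the answer. With a document collection and a larger `k`, it acts as the retrieval step that narrows a very long context down before a reader model looks for the answer span.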

Papers/repo I would like to explore -

- Question Answering on SQuAD

- Probably uses Hugging Face directly for QA

- CS 224N Default Final Project: Question Answering

- Approach for SQuAD 2.0

- Original SQuAD 2.0 paper

Short Notes on the BiDAF Model

The paper was introduced in 2016 to solve tasks that require answering a query about a long context paragraph (Machine Comprehension or Question Answering), and it uses an attention mechanism to approach the problem.

Important highlights mentioned in the Abstract section -

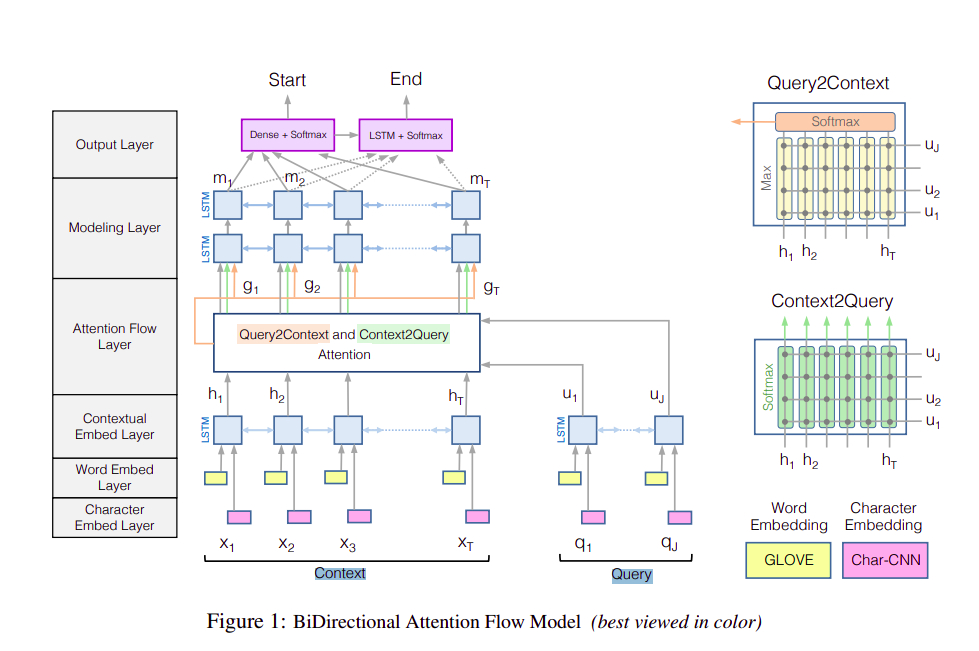

BIDAF includes character-level, word-level, and contextual embeddings, and uses bi-directional attention flow to obtain a query-aware context representation

Our experiments show that memory-less attention gives a clear advantage over dynamic attention

We use attention mechanisms in both directions, query-to-context and context-to-query, which provide complementary information to each other.
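To make the two attention directions concrete, here is a minimal pure-Python sketch of the attention flow layer as I read it from the BiDAF paper. It has four parts:

- a similarity score S[t][j] = w · [h_t; u_j; h_t * u_j] between every context word t and query word j (`*` is elementwise);
- context-to-query attention, a softmax over each row of S;
- query-to-context attention, a softmax over the row-wise maxima of S;
- the output [h; ũ; h * ũ; h * h̃] for every context word.

Vectors are stored as rows here (the paper uses columns). The weight vector `w` would normally be learned, but here it is simply an input.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def elementwise(a, b):
    """Elementwise product of two equal-length vectors."""
    return [x * y for x, y in zip(a, b)]


def weighted_sum(weights, vectors):
    """Sum of vectors scaled by the matching weights."""
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]


def attention_flow(H, U, w):
    """H: T context vectors, U: J query vectors (each of size d), w: 3d weights."""
    # Similarity matrix: S[t][j] = w . [h_t; u_j; h_t * u_j]
    S = [[sum(wi * xi for wi, xi in zip(w, h + u + elementwise(h, u))) for u in U]
         for h in H]
    # Context-to-query: every context word attends over all query words.
    U_tilde = [weighted_sum(softmax(row), U) for row in S]
    # Query-to-context: weight context words by their best match to any query word.
    b = softmax([max(row) for row in S])
    h_tilde = weighted_sum(b, H)  # one vector, reused (tiled) for every context word
    # Query-aware context representation: G_t = [h_t; u~_t; h_t * u~_t; h_t * h~]
    return [h + ut + elementwise(h, ut) + elementwise(h, h_tilde)
            for h, ut in zip(H, U_tilde)]
```

Each row of the result has size 4d, one row per context word. This query-aware context representation is what the later layers of the model consume.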

Model

As seen in the figure, the Context and Query sentences are passed through two separate backbone branches, one for each. The backbone in the bottom half uses three separate encoding mechanisms: the Character Embedding Layer, the Word Embedding Layer, and the Contextual Embedding Layer.
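Here is a minimal sketch of how the first two layers could produce one vector per word. In the Character Embedding Layer, characters are embedded, convolved with 1D filters, and max-pooled over positions so every word gets a fixed-size vector. In the Word Embedding Layer, that result is concatenated with a pre-trained word vector such as GloVe. The ReLU activation, the zero vectors for unknown characters and words, and the toy lookup tables are my own assumptions for illustration. In the paper, this concatenation then goes through a two-layer highway network before the bi-directional LSTM of the Contextual Embedding Layer; neither is shown here.

```python
def char_cnn(word, char_vectors, filters):
    """Character Embedding Layer sketch: embed chars, 1D-convolve, max-pool over positions.

    char_vectors: dict mapping a character to its embedding (all the same size).
    filters: list of kernels; each kernel is `width` rows, one per character
             position in the window (all kernels assumed to share the same width).
    """
    dim = len(next(iter(char_vectors.values())))
    width = len(filters[0])
    # Unknown characters map to a zero vector (assumption).
    chars = [char_vectors.get(c, [0.0] * dim) for c in word]
    # Pad short words so there is at least one full window.
    while len(chars) < width:
        chars.append([0.0] * dim)
    features = []
    for kernel in filters:
        responses = []
        for start in range(len(chars) - width + 1):
            window = chars[start:start + width]
            score = sum(k * x for row_k, row_x in zip(kernel, window)
                        for k, x in zip(row_k, row_x))
            responses.append(max(0.0, score))  # ReLU (assumed activation)
        features.append(max(responses))  # max-pool: one number per filter
    return features


def embed_word(word, word_vectors, char_vectors, filters):
    """Concatenate the word-level vector (e.g. GloVe) with the char-CNN features."""
    dim = len(next(iter(word_vectors.values())))
    word_vec = word_vectors.get(word.lower(), [0.0] * dim)  # unknown word -> zeros
    return word_vec + char_cnn(word, char_vectors, filters)
```

Max-pooling over positions is what makes the character features independent of word length. It also means rare or misspelled words still get a useful representation even when they are missing from the word-vector table.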